Memo Published May 30, 2025

What is the Artificial Intelligence Singularity?

Mike Sexton

Takeaways

- The singularity is the point where AI surpasses human intelligence and can improve itself better than humans can.

- The first AI that can perform any intellectual task a human can is known as artificial general intelligence (AGI)—and some experts believe it’s already here.

- Building superintelligence is risky. Letting China build it first is even riskier.

- Abundant superintelligence can radically improve every American’s life and aid us in tackling the world’s most urgent challenges.

What is the Singularity?

The term technological singularity refers to the point where AI surpasses human intelligence and can improve itself better than humans can.1 British mathematician I. J. Good first hypothesized the singularity in a 1965 paper, and today’s state-of-the-art AI is crossing the boundary from singularity theory into reality.2

In singularity theory, the first AI that can perform any intellectual task a human can is known as artificial general intelligence (AGI).3 Because AGI is as smart as any human, it will be able to improve itself as well as humans can, if not better. AGI will therefore quickly lead to artificial superintelligence (ASI) in a process known as the intelligence explosion. The singularity is the threshold at which ASI emerges with intelligence beyond our current abilities.

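The term “singularity” is borrowed from astrophysics, where it refers to the point at the center of a black hole at which known physical laws break down. It is hidden behind the event horizon, the boundary beyond which even light cannot escape the black hole’s gravitational pull.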

Theorists expect that ASI will transform all human endeavors in ways we cannot predict, developing solutions and innovations that we cannot imagine. Although generalized superintelligence does not exist yet, narrow superintelligence does.4 Google DeepMind has developed AlphaZero, an AI that can outperform any human at chess, shogi (Japanese chess), and Go (a strategy board game).5 It has also released AlphaFold, an AI that predicts how protein molecules fold, a task that used to require years of scientific experimentation.6

Although the singularity is philosophically profound, it will not necessarily announce itself. There is no expert consensus on what qualifies as AGI, and there is no guarantee that such a consensus will ever emerge. However, the arrival of DeepSeek in January 2025 showed us that the intelligence explosion might unfold hand in hand with rapid worldwide proliferation. This will be a pivotal moment to strategically assess and align our policy positions and priorities.

Are we there yet?

Economist and AI expert Tyler Cowen believes that OpenAI’s o3 model qualifies as AGI.7 According to OpenAI president Greg Brockman, it is the first model that top scientists report producing “legitimately good and useful novel ideas.”8 There is an emerging consensus among experts that, even on conservative estimates, AGI should arrive by the end of the decade at the latest.9

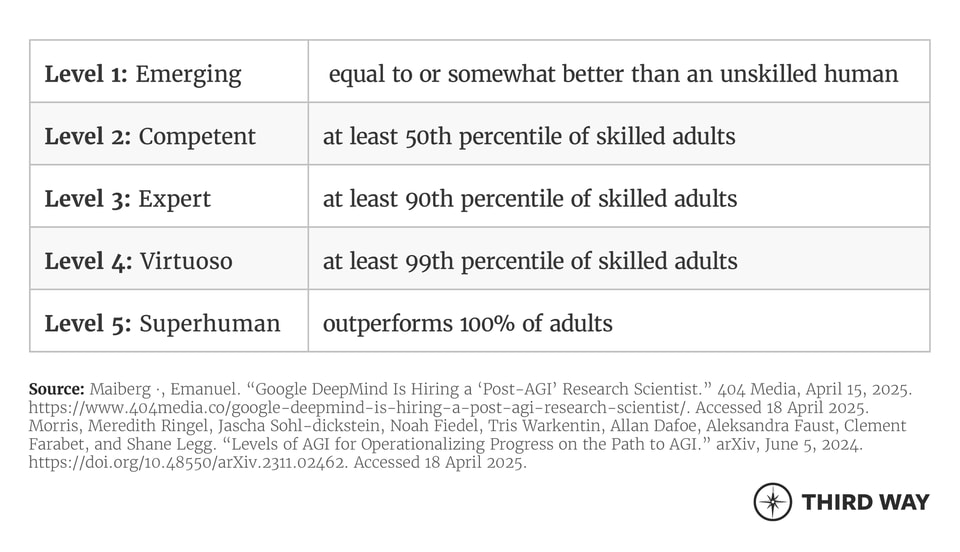

Google DeepMind, which is already hiring post-AGI researchers, has released a five-level framework to assess progress toward AGI.10

These levels have two dimensions: performance (depth) and generality (breadth). Even as the gaps in frontier AI models’ knowledge shrink, their performance profiles across these axes remain jagged.11 In the areas where AI already evidently exceeds human abilities, such as board games, protein folding, translation, and summarization, models have leapt from human-level to superhuman performance almost instantaneously.

Yet some argue that AGI should not only include book smarts, but also the ability to understand, learn from, and physically act on the world around it. This is known as embodied AI, which encompasses fields like self-driving cars and robotics.12 As large language model development reaches maturity, we can expect the AI industry to pivot toward advancing the state of the art in humanoid robots—as Meta and Google already are.13

What do we do?

Assuming the singularity is either underway or imminent, we must adapt our strategies and agendas to meet the moments ahead. The campaign for a six-month pause in AI development failed, and the state of the art in AI advances apace.14

There is broad agreement in AI and national security circles, including among Democrats like Michèle Flournoy, that maintaining this momentum should be a national policy priority. If American companies ceased work on AI development, companies in China and elsewhere would gladly fill the vacuum, and the future of AI could be ceded to authoritarian control. That is why American leadership in AI, including in open-source AI, is a national security issue.

To meet this moment, lawmakers should adopt a broad-based AI agenda that does three critical things: advance, protect, and implement.

- Advance AI development to maintain global leadership.

- Protect communities and individuals from AI bias and related harms.

- Implement AI innovations in practical ways that tangibly improve Americans’ lives.

If we believe AI cannot help us, whether as a country, a party, or individuals, that belief will become a self-fulfilling prophecy. To benefit from AI, it is not enough to build it: we must find useful ways to put it to work. Whether AI serves humanity is a question not just of regulation but also of implementation.

China has achieved superpower status through stable, centralized, long-term, technology-centric state planning. Although democratic governments are struggling to demonstrate the same strategic competence, it is the United States’ AI development ecosystem that led the world to the brink of the singularity. We’ll be better off working with it than against it.